1. Why Bulk Inserts Matter

When dealing with large datasets, for example importing a CSV containing more than a thousand tasks from your department, inserting records one by one (.create or .save) can drastically slow down performance and cause database bottlenecks.

Imagine inserting 100,000 records: doing it the traditional way means 100,000 separate INSERT queries! Bulk inserting reduces this to a single multi-row query (or a handful of batched ones), saving time and resources.

2. Prerequisites

Before diving in, ensure:

- You’re using Rails 6+ for insert_all

- You have the activerecord-import gem installed if you are on an older Rails version (the gem supports Rails 3.x)

3. Step-by-Step Guide

3.1 The Problem With Each-By-Each Inserts

For example, suppose you want to create 100k task records the naive way. It looks like this:
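A minimal sketch of the one-by-one approach, assuming a Task model with a name column (both names are assumptions for illustration, as are the helper methods). The database call is guarded so the snippet also loads outside a Rails app:

```ruby
# Hypothetical helper: attribute hashes for `count` tasks (the name column is assumed).
def task_attributes(count)
  (1..count).map { |i| { name: "Task #{i}" } }
end

# Naive approach: every create! call issues its own INSERT statement.
def create_one_by_one(model, rows)
  rows.each { |attrs| model.create!(attrs) }
end

# Guarded so the file also loads outside a Rails app, where Task is undefined.
create_one_by_one(Task, task_attributes(100_000)) if defined?(Task)
```

Passing the model in as an argument is only a convenience for trying the loop against a stand-in object; in an app you would usually call Task.create! directly.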

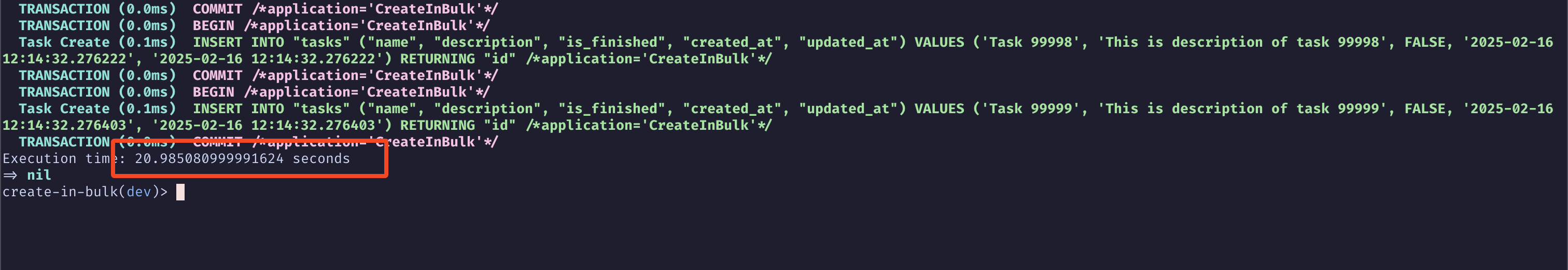

I benchmarked this approach with the following snippet:
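One way such a measurement could be written, using Ruby's standard Benchmark module. The measure helper and the Task model are assumptions, and timings will differ by machine and database:

```ruby
require "benchmark"

# Runs the block, prints the elapsed wall-clock time, and returns it in seconds.
def measure(label)
  seconds = Benchmark.realtime { yield }
  puts format("%s: %.2f seconds", label, seconds)
  seconds
end

# Task is an assumed model; the guard keeps this runnable outside Rails.
if defined?(Task)
  measure("create one by one") do
    100_000.times { |i| Task.create!(name: "Task #{i}") }
  end
end
```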

The image shows that creating the records takes 20.99 seconds.

✅ Pros:

- Model validations and callbacks work - For example, if the name field must not be null and a record is created with a null name, the insert fails with an error.

- Supports Rails versions older than 6.0 - Works in legacy projects without bulk insert support.

❌ Cons:

- Performance Issue - Each insert requires a separate database transaction, increasing overhead.

- Increased Network Traffic - 100k requests are sent to the database, which increases network costs.

3.2 Solution 1: Use insert_all

The insert_all method allows you to insert multiple records at once, significantly boosting performance.

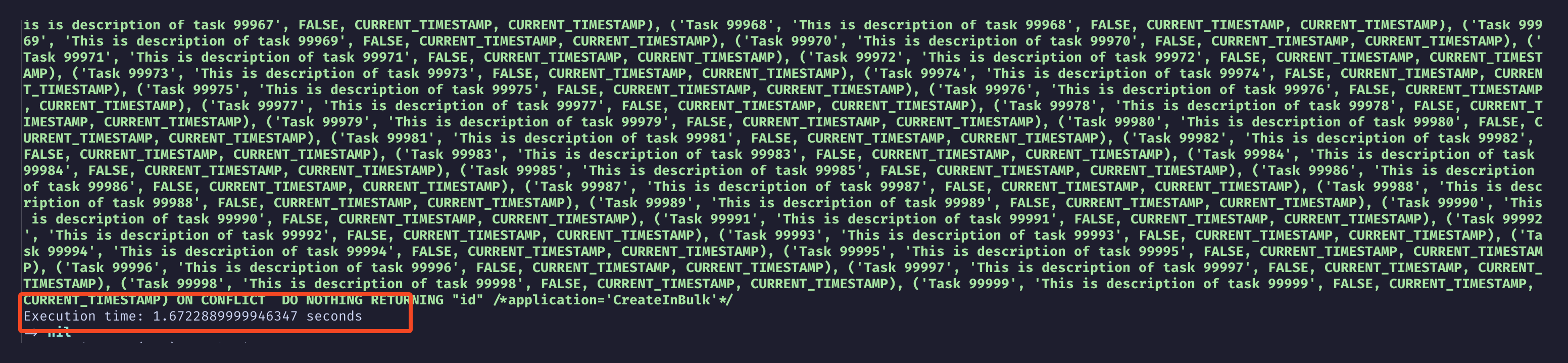

Example: Using insert_all to create 100k tasks
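A minimal sketch of what this can look like, again assuming a Task model with a name column plus created_at and updated_at columns (all assumptions). The timestamps are set by hand because insert_all skips the model layer and, in Rails 6, does not fill them in:

```ruby
# Hypothetical helper: plain hashes, since insert_all does not accept model instances.
def task_rows(count, now: Time.now)
  (1..count).map { |i| { name: "Task #{i}", created_at: now, updated_at: now } }
end

# One multi-row INSERT; validations and callbacks are skipped.
# Guarded so the snippet also loads outside a Rails app.
Task.insert_all(task_rows(100_000)) if defined?(Task)
```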

The image shows that creating the records takes 1.67 seconds.

✅ Pros:

- Faster than .create - Single SQL query

- Rails built-in method - Bulk operations directly in Rails without extra gems.

❌ Cons:

- Does not accept ActiveRecord models – Only works with raw hashes.

- Bypasses model validations and callbacks – Data integrity must be handled manually.

- Cannot handle associations automatically – Requires extra queries to fetch related IDs.

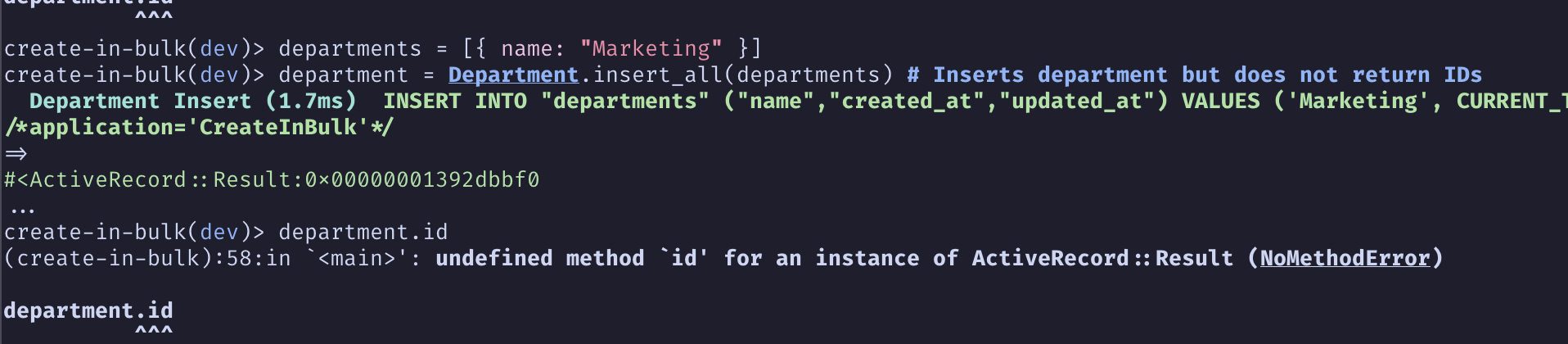

Example: Inserting Tasks that Belong to a Department

The image shows that no id is returned after using insert_all, so there is no department_id to assign to the tasks.

👉 Fix: Manually retrieve the department_id before inserting the tasks, at the cost of an extra query.
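The fix could be sketched as follows. The Department and Task models, their columns, the "Engineering" name, and the rows_for_department helper are all assumptions for illustration:

```ruby
# Hypothetical helper: task hashes that carry the foreign key explicitly.
def rows_for_department(department_id, names, now: Time.now)
  names.map do |name|
    { name: name, department_id: department_id, created_at: now, updated_at: now }
  end
end

# Guarded so the snippet also loads outside a Rails app.
if defined?(Department) && defined?(Task)
  now = Time.now
  Department.insert_all([{ name: "Engineering", created_at: now, updated_at: now }])

  # Extra query: look the id up ourselves before building the task rows.
  department_id = Department.find_by!(name: "Engineering").id

  Task.insert_all(rows_for_department(department_id, ["Task 1", "Task 2"]))
end
```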

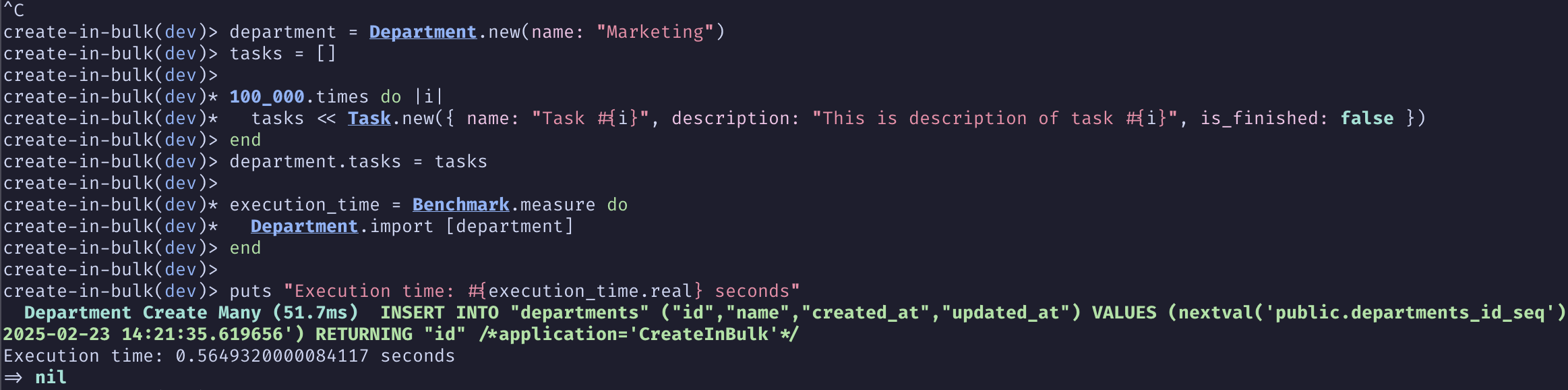

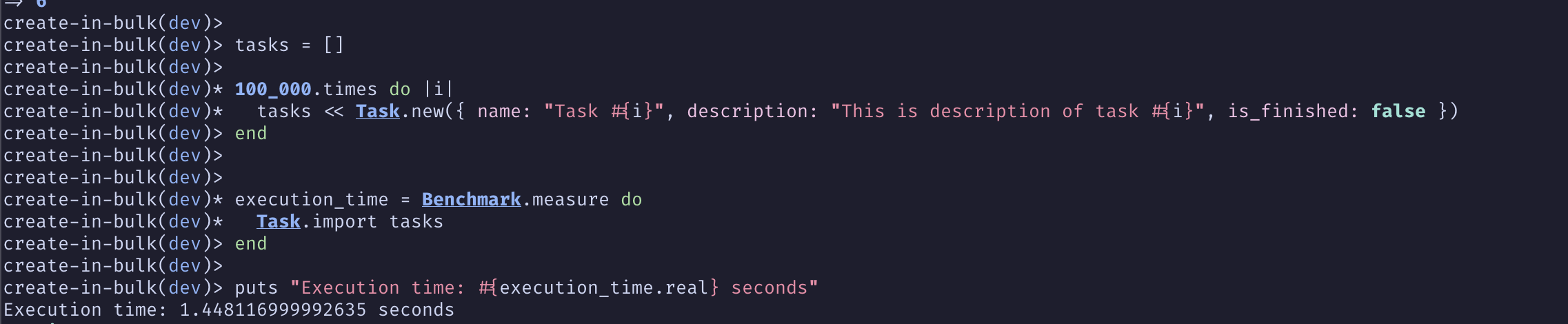

3.3 Solution 2: Best of both worlds - Use activerecord-import gem

The import method allows you to insert models with associations.

Example: Using import to create 100k tasks in 1 department

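A minimal sketch under assumptions: gem "activerecord-import" is in the Gemfile, and Department and Task are hypothetical models where Task belongs_to :department. This version creates the department first and then imports model objects, which may differ from how the benchmarked code was written:

```ruby
# Hypothetical helper: builds unsaved model objects linked to the department.
def build_tasks(task_class, department, count)
  (1..count).map { |i| task_class.new(name: "Task #{i}", department: department) }
end

# Guarded so the snippet also loads where the models or the gem are unavailable.
if defined?(Department) && defined?(Task) && Task.respond_to?(:import)
  department = Department.create!(name: "Engineering")
  tasks = build_tasks(Task, department, 100_000)

  # Bulk insert of model objects; validations run unless validate: false is passed.
  Task.import(tasks)
end
```

The gem's README also describes a columns-plus-values form, Task.import(columns, values), which it lists as the fastest option.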

- Able to import 1 department with 100k tasks using activerecord-import

- Performance is similar to insert_all (about 1.44 seconds)

✅ Pros:

- Works with raw columns and arrays of values (fastest)

- Works with model objects (faster)

- Performs validations (fast)

- Performs on duplicate key updates (requires MySQL, SQLite 3.24.0+, or Postgres 9.5+)

❌ Cons:

- Requires installing an extra gem

- ActiveRecord callbacks related to creating, updating, or destroying records (other than before_validation and after_validation) will NOT be called when calling the import method (the recommendation is to call them separately with run_callbacks)

4. Conclusion

One rule to remember when inserting a large number of records (for example, importing them from a CSV file) is to avoid creating records one by one. Instead, consider using activerecord-import or insert_all for much better performance.

5. Reference

- activerecord-import: https://github.com/zdennis/activerecord-import

- insert_all: https://apidock.com/rails/v6.0.0/ActiveRecord/Persistence/ClassMethods/insert_all